By Rowland Manthorpe, technology correspondent

Police are deciding who to target with facial recognition using criteria so broad that a senior MP says they could include "literally anybody", Sky News can reveal.

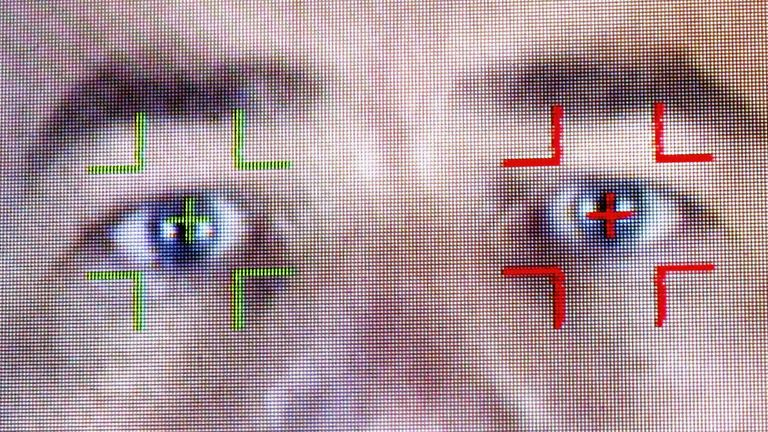

Automatic facial recognition technology scans faces in a crowd before comparing the results to a "watchlist" – a list of people who have had photos of their faces entered into the system by police.

According to internal documents uncovered by Sky News, South Wales Police – the force leading the Home Office-backed trial of the controversial technology – places "persons where intelligence is required" on its watchlists, alongside wanted suspects and missing people.

Former cabinet minister David Davis told Sky News such "extraordinarily wide-ranging" criteria were probably unlawful.

"That could be anybody, literally anybody," Mr Davis said. "As a level of justification it is impossible to test. You couldn't go to a court and say: 'Can you judge whether this is right or wrong?'

"You're going to use this facial recognition to arrest people, to follow them, to keep data on them, to intrude on their privacy – all of this with no real test of the sort of person you're looking for."

Police forces have defended the use of facial recognition by saying it could be used to catch terrorists and serious criminals.

South Wales Police told Sky News its watchlists were proportionate and necessary, and that anyone on a watchlist was there for a specific policing purpose.

According to the internal document, watchlists typically contain 500 to 700 images taken from the giant police custody bank of 450,000 photos, which is collected from sources including CCTV, body-worn cameras and social media.

The images may include photos gathered when someone was found innocent of a crime, as South Wales Police decided it was "impracticable at this stage to manually remove unconvicted custody images from AFR Locate watchlists".

South Wales Police is currently being challenged in a judicial review brought by human rights organisation Liberty, which claims facial recognition technology breaches fundamental human rights.

"The use of secretive watchlists, which can be filled with people who aren't suspected of wrongdoing, is one of many alarming ways in which the police disregard our rights when using this invasive mass surveillance tool," said Hannah Couchman, policy and campaigns officer at Liberty.

"This authoritarian technology also violates the privacy of everyone who passes the cameras – taking their biometric data without their consent and often without their knowledge. It has no place on our streets – it belongs in a dystopian police state."

Earlier this month, Sky News revealed the results of an independent evaluation of the Metropolitan Police's use of the exact same technology, which is provided by Japanese technology firm NEC. It found that the system had an error rate of 81%, meaning four in five matches were incorrect.

The evaluation also found issues with Metropolitan Police watchlists, warning of "significant ambiguity" and an "absence of clear criteria for inclusion".

There were also problems with keeping the watchlists up-to-date...

Sky News
