Often, when new iOS jailbreaks become public, the event is bittersweet. The exploit allowing people to bypass restrictions Apple puts into the mobile operating system allows hobbyists and researchers to customize their devices and gain valuable insights that come from peeking under the covers. That benefit is countered by the threat that the same jailbreak will give hackers a new way to install malware or unlock iPhones that are lost, stolen, or confiscated by unscrupulous authorities.

Friday saw the release of Checkm8. Unlike just about every jailbreak exploit released in the past nine years, it targets the iOS bootrom, which contains the very first code that's executed when an iDevice is turned on. Because the bootrom is contained in read-only memory inside a chip, jailbreak vulnerabilities that reside there can't be patched.

Checkm8 was developed by a hacker who uses the handle axi0mX. He's also the developer of another jailbreak-enabling exploit, called alloc8, that was released in 2017. Because alloc8 was the first known iOS bootrom exploit in seven years, it was of intense interest to researchers, but it worked only on the iPhone 3GS, which was seven years old by the time alloc8 went public. That limitation left the exploit with little practical application.

Checkm8 is different. It works on 11 generations of iPhones, from the 4S to the X. While it doesn't work on newer devices, Checkm8 can jailbreak hundreds of millions of devices in use today. And because the bootrom can't be updated after the device is manufactured, Checkm8 will be able to jailbreak those devices in perpetuity.

I wanted to learn how Checkm8 will shape the iPhone experience, particularly as it relates to security, so I spoke at length with axi0mX on Friday. Thomas Reed, director of Mac offerings at security firm Malwarebytes, joined me. The takeaways from the wide-ranging interview are:

- Checkm8 requires physical access to the phone. It can't be remotely executed, even if combined with other exploits.

- The exploit allows only tethered jailbreaks, meaning it lacks persistence. The exploit must be run each time an iDevice boots.

- Checkm8 doesn't bypass the protections offered by the Secure Enclave and Touch ID.

- All of the above means people will be able to use Checkm8 to install malware only under very limited circumstances. It also means that Checkm8 is unlikely to make it easier for people who find, steal, or confiscate a vulnerable iPhone, but don't have the unlock PIN, to access the data stored on it.

- Checkm8 is going to benefit researchers, hobbyists, and hackers by providing a way not seen in almost a decade to access the lowest levels of iDevices.

Read on to find out, in axi0mX's own words, why he believes this is the case:

Dan Goodin: Can we start with the broad details? Can you describe at a high level what Checkm8 is, or what it is not?

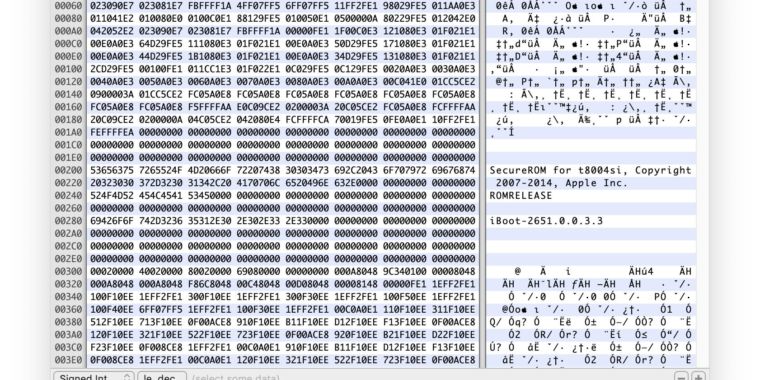

axi0mX: It is an exploit, and that means it can get around the protection that Apple built into the bootrom of most recent iPhones and iPads. It can compromise it so that you can execute any code you want at the bootrom level. That is something that used to be common years ago, during the days of the first iPhone and iPhone 3G and iPhone 4. There were bootrom exploits [then] so that people could jailbreak their phones through the bootrom, and later that would not be possible.

The last bootrom exploit that was released was for iPhone 4 back in 2010, I believe by Geohot. After that, it was not possible to exploit an iPhone at this level. All the jailbreaks [that] were done later on [happened] once the operating system booted. The reason the bootrom is special is that it's part of the chip that Apple made for the phone. So whatever code is put there in the factory is going to be there for the rest of its life. So if there is any vulnerability inside the bootrom, it cannot be patched.

Persistence and Secure Enclave

DG: When we talk about things that aren't patchable, we're talking about the bug. What about the change to the device itself? Is that permanent, or once the phone is rebooted, does it go back to its original state?

A: This exploit works only in memory, so it doesn't have anything that persists after reboot. Once you reboot the phone… then your phone is back to an unexploited state. That doesn't mean you can't do other things that would modify the device, because you have full control of it. But the exploit itself does not actually perform any changes. It all lasts only until you reboot the device.

DG: In a scenario where either police or a thief obtains a vulnerable phone but doesn't have an unlock PIN, are they going to be helped in any way by this exploit? Does this exploit allow them to access parts of this phone or do things with this phone that they couldn't otherwise do?

A: The answer is "It depends." Before Apple introduced the Secure Enclave and Touch ID in 2013, you didn't have advanced security protections. So, for example, the [San Bernardino gunman's] phone that was famously unlocked [by the FBI], the iPhone 5c, didn't have a Secure Enclave. So in that case, this vulnerability would allow you to very quickly get the PIN and get access to all the data. But for pretty much all current phones, from iPhone 6 to iPhone 8, there is a Secure Enclave that protects your data if you don't have the PIN.

My exploit does not affect the Secure Enclave at all. It only allows you to get code execution on the device. It doesn't help you [crack] the PIN, because that is protected by a separate system. But older devices like the iPhone 5, which have been deprecated for a while now, don't have a separate system, so in that case you would be able to [access data] quickly [without an unlock PIN].

DG: So this exploit isn't going to be of much benefit to a person who has that device [with Secure Enclave] but does not have the PIN, right?

A: If by benefit you mean accessing your data, then yes, that is correct. But it's still possible they might have goals other than accessing your data, and in that case, it's possible they would get some benefit.

DG: Are you talking about creating some sort of backdoor so that, once the owner enters the PIN, it gets sent to the attacker, or a scenario like that?

A: If, say, for example, you leave your phone in a hotel room, it's possible that someone did something to your phone that causes it to send all of the information to some bad actor's computer.

DG: And that would happen after the legitimate owner returned and entered their PIN?

A: Yes, but that's not really a scenario that I would worry much about, because attackers at that level… would be more likely to get you to go to a bad webpage or connect to a bad Wi-Fi hotspot in a remote exploit scenario. Attackers don't like to be close. They want to be in the distance and hidden.

In this case [involving Checkm8], they would have to physically hold your device in their hand and would have to connect a cable to it. It requires access that most attackers would like to avoid.

This attack does not work remotely

DG: How likely or feasible is it for an attacker to chain Checkm8 to some other exploit to devise remote attacks?

A: It's impossible. This attack does not work remotely. You have to have a cable connected to your device and put your device into DFU mode, and that requires you to hold buttons for a couple of seconds in the correct way. It's something that most people have never used. There is no feasible scenario where someone would be able to use this attack remotely.

If you want to talk [about] really hypothetical situations, if you're a jailbreaker and you're trying to use your exploit on your own computer and somehow your computer is compromised, it's possible someone on your computer is going to deliver a different version of the exploit that does more stuff than what you want to do. But that is not a scenario that's going to apply to most people. That is a scenario that is simply not practical.

Thomas Reed: Does the bootrom code that's loaded into RAM get modified by the exploit, or is that not a requirement? Through this vulnerability, would you need to make modifications to the bootrom code that's loaded into RAM, or would that not be a factor? Would that not be involved in the way the exploit works? I'm under the assumption that some of the code from the bootrom is loaded into RAM when it's executed. Maybe I'm wrong about that.

A: The correct answer is that it's complicated. The code that is used by the bootrom is all in read-only memory. It doesn't need to get copied in order to be used. In order for my device to do what I want, I also want to inject some custom code. I can't write my code into the read-only memory, so my only option is to write it into RAM or, in this case, SRAM (the low-level memory that is used by the bootrom) and then have my injected code live in this small space. But the actual bootrom code itself does not get copied in there. It's only the things that I added to my exploit.

TR: Can this be used to install any other code, any other programs that you wanted, with root-level permissions, so that you could install malware through this?

A: The correct answer is "It depends." When you decide to jailbreak your phone using this exploit, you can customize what Apple is doing. Apple has some advanced protections. A lot of their system is set up so that you don't have malware running. If you decide to jailbreak, you're going to get rid of some of the protections. Some people might make a jailbreak that keeps a lot of those protections, but the exploit also allows you to remove protections. Other people might remove all protections altogether.

The jailbreak that you can make with this exploit always requires you to exploit the device fresh after reboot. So if you don't use the exploit, your device will only boot to a clean install [version] of iOS. It's not like you can install malware once and then have it stay forever if you're not using the exploit because iOS has protections against that.

More about persistence

DG: Somebody could use Checkm8 to install a keylogger on a fully up-to-date iOS device, but the second that they rebooted the phone, that keylogger would be gone, right?

A: Correct. Or it wouldn't work. Even if they left the keylogger there, iOS would just say: "This app is not authorized to run on this phone, so I'm not going to run it."

iOS devices have what's called a secure boot chain. Starting from the bootrom, every single step is checked by the previous stage so that it is trusted. Each stage's signature is always verified, so the phone only allows you to run software that is meant to be running. If you choose to break that chain of trust and run software that you want to run, then exactly what you do will determine what else can happen. If you choose not to break the chain of trust and simply use your phone the way Apple wants you to use it, without jailbreaking it, then this chain of trust is secure. So malware will not be able to get around it the next time you boot your phone, because you are relying on the chain of trust.

You cannot actually persist using this exploit. The only way you can break the chain of trust is to do it manually on every boot. You have to put your phone into DFU mode when you boot, connect a cable to it, and then run the exploit in order to jailbreak your phone. At that point you can do whatever you want. But none of that will happen if you… just boot normally. In that sense, it is not persistent.
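To make the chain-of-trust idea concrete, here is a toy model in Python. It is a sketch under loud assumptions, not how Apple implements secure boot: real iPhones verify public-key signatures rooted in an Apple key stored in the bootrom, whereas this model uses an HMAC with a hypothetical `VENDOR_KEY` as a standard-library stand-in. The stage names, the `Stage` type, and the `bootrom_compromised` flag are illustrative inventions. What it tries to show is why a bootrom bug matters: the bootrom is the root of the chain and nothing checks it, so code running there can skip every later check, but only for the single boot in which the exploit is run.

```python
import hashlib
import hmac
from dataclasses import dataclass

# HYPOTHETICAL stand-in for the vendor's signing key. Real devices check
# public-key signatures; HMAC keeps this sketch within the standard library.
VENDOR_KEY = b"hypothetical-vendor-key"


@dataclass
class Stage:
    name: str         # illustrative label, e.g. "bootloader"
    code: bytes       # the boot image for this stage
    signature: bytes  # tag attached by the vendor at build time


def sign(code: bytes) -> bytes:
    """Compute the (toy) signature the vendor would attach to an image."""
    return hmac.new(VENDOR_KEY, code, hashlib.sha256).digest()


def verify(stage: Stage) -> bool:
    """Check a stage's signature in constant time before running it."""
    return hmac.compare_digest(sign(stage.code), stage.signature)


def boot(chain: list[Stage], bootrom_compromised: bool = False) -> list[str]:
    """Hand control down the chain, verifying each stage before it runs.

    chain[0] models the bootrom: it sits in read-only memory and nothing
    checks it, so it is the root of trust. bootrom_compromised is a
    HYPOTHETICAL model of a tethered bootrom exploit: when True, the checks
    are skipped. It is a per-call argument, so an ordinary reboot (calling
    boot() without it) enforces every check again.
    """
    booted = [chain[0].name]
    for stage in chain[1:]:
        if not bootrom_compromised and not verify(stage):
            raise RuntimeError(f"{stage.name}: signature check failed, refusing to boot")
        booted.append(stage.name)
    return booted


if __name__ == "__main__":
    images = [("bootrom", b"rom"), ("bootloader", b"loader"), ("kernel", b"kernel")]
    chain = [Stage(name, code, sign(code)) for name, code in images]
    print(boot(chain))  # an untouched chain boots normally

    # Tamper with the kernel image but keep its old signature.
    tampered = chain[:2] + [Stage("kernel", b"kernel+keylogger", chain[2].signature)]
    print(boot(tampered, bootrom_compromised=True))  # runs only while exploited
    try:
        boot(tampered)  # ordinary reboot: the check is back in force
    except RuntimeError as err:
        print(err)
```

The compromise lives only in the arguments of one `boot()` call and nothing is written back into the stages, which mirrors the in-memory, tethered behavior axi0mX describes: a normal boot rejects the tampered kernel.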

TR: In the case of a company like Cellebrite or Grayshift getting your device and wanting to capture data from it, as I understand it, if you don't have the key (which you wouldn't, because it's in the Secure Enclave), a lot of the data is going to be encrypted, and it's not going to be accessible. It sounds like Checkm8 really wouldn't be of much use to them. Is that correct, or would there be some things that they could do with it?

A: As a standalone exploit, the answer is "No, they can't do much with it." But it's possible, perhaps likely, that they would use more than one exploit—they have an exploit chain—in order to do what they want to do. And in that case, they could use this one instead of another one that they have because maybe it's faster, maybe they don't have to worry about protecting it. So it's possible that this could serve as a step that they take in order to crack the PIN code.

This does not give them anything that would directly be able to guess the PIN code without other exploits. I don't know what they have. It's possible that they just have one thing that they use, and in that case, they probably would not use this in any way. But it's also possible that this could replace one of the bugs that they use in order to do whatever they're doing.

TR: I think the appeal of that would be that it's something Apple can't patch, if they had an exploit chain that would give them access to a lot of devices.

DG: So this is more of an incremental development [f